The first generation of network verification research, or Network Verification 1.0, has been tremendously successful. It has produced a wide range of methods that enable strong guarantees about important aspects of large, complex networks. Unlike common tools such as ping and traceroute, which provide visibility into the experience of individual flows, verification tools can check the behavior for all possible flows even if that set has billions of flows. Today, most large cloud providers are using this technology to ensure high availability and security.

However, outside of the large cloud providers, the vast majority of networks are still being built and operated without automated reasoning and with all of the availability and security risks that go with such an operation. We argue that the goal of the next generation of network verification research, or Network Verification 2.0 should be to make network verification tools as prevalent as ping and traceroute. Just as no complex hardware or software artifact is built today without rigorous testing, no network should be built without rigorous verification.

Meeting this goal requires addressing different challenges than the ones we have addressed thus far. Network Verification 1.0 addressed two challenges 1) formulating the verification problems in a way that balanced utility (find interesting bugs) and tractability (in a reasonable amount of time), and 2) developing analytical techniques to efficiently solve those problems. These techniques are sufficiently powerful that they are not what is holding back network verification from mainstream adoption. Instead, what impedes adoption today is how easily network engineers can use the tools and how easily tool authors can extend analysis to adjacent functionality not already covered such as link layer protocols, virtual networks, etc.

Thus, Network Verification 2.0 must address these challenges: 1) making it easy for network engineers to consume network verification; and 2) making it easy to extend verification tools to cover more network functionality. We explain these challenges below and outline a solution approach inspired by the domain of software analysis.

The challenge of consuming network verification

Verification is difficult to consume for network engineers today because its input-output abstractions as well as goals are different from what they are familiar with. The input to common tools such as traceroute is a concrete flow (i.e., a starting point and packet headers). In contrast, verification inputs are (large) sets of flows. Precisely specifying such sets is hard because of the unwritten assumptions inside many real networks. Consider the task of verifying that “no packet from A to B is dropped.” A natural way to verify this behavior is via the invariant that all possible packets that start at A, with B as their destination address, reach B. However, this invariant can legitimately fail in a network that is configured to prevent source IP spoofing, or prevents packets for certain blocked applications (e.g., telnet), or blocks packets to/from IP addresses that are deemed malicious by an external service. The precise invariant that engineers need to specify is thus complex and possibly also time-varying. We need to make it easier for engineers to specify such invariants.

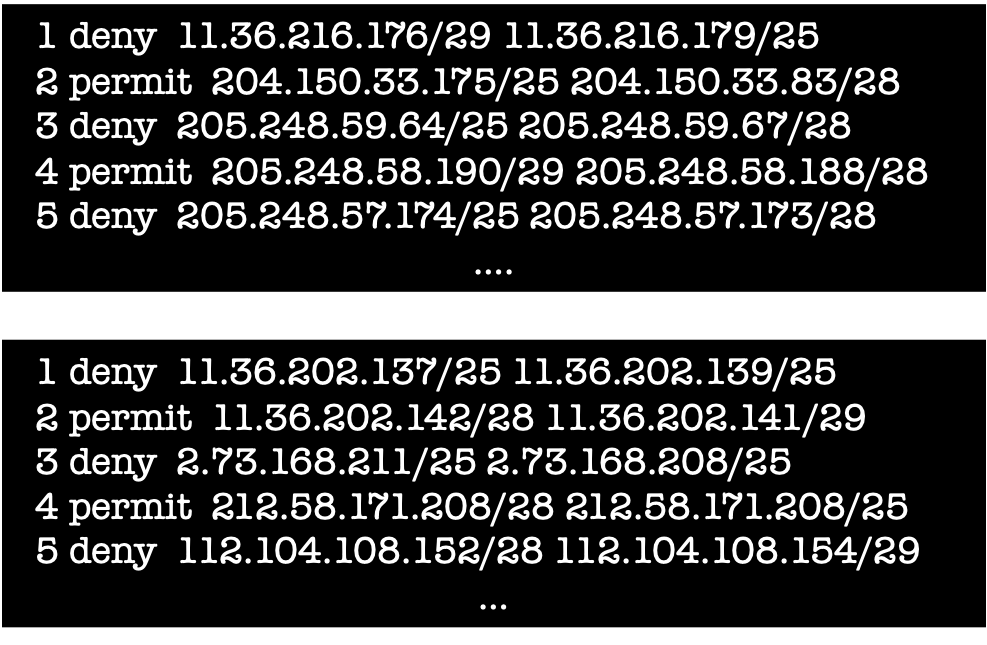

Similarly, the verification tool output may be large sets of flows that are dropped from A to B. Communicating such sets, which may be a complex combination of multiple header fields, is tricky. For example, the path from A to B may contain a number of access control lists (ACLs) similar to the ones shown below, the ACLs may have 100s of lines, and may filter on more than just the source and destination addresses.

Communicating complex spaces via (a few) examples is a possibility but can be misleading. If we pick a source IP that is not in the network, the engineer may conclude that the discovered violation is irrelevant. In reality, the source IP was just an example and in-network IPs (not picked as an example) may also lead to invariant violations. We need to fundamentally rethink how the complex output of verification tools can be effectively communicated to engineers.

Finally, consider the goals of common tools today versus verification. While traceroute is often used to diagnose reported problems, verification is intended to proactively ensure the absence of problems. When diagnosing reported problems, the engineer knows when their job is done. However, when proactively protecting against (unknown) problems, how do they know when they are done? Verification is not an on/off switch, and verified networks can fail too. We have seen incidents where networks that use verification still suffered outages despite the bug being detectable via verification. The engineers did not have an invariant in their verification test suite that would have flagged that bug. To prevent such outages, engineers need feedback on how well they are using network verification and help them use it more effectively.

The challenge of extending network verification tools

Network verification tools are engineered as monoliths today. They have a custom model of the target network functionality (e.g., packet forwarding) and an analysis engine that is intimately tied to the model. This coupling makes it difficult to extend network verification to more functionality that we may know how to verify analytically but the tool does not support (e.g., packet forwarding with a new type of transformations or virtual networks with underlay and overlay forwarding). Such extensions require one to develop or modify both the model of network functionality and the analysis engine. This is a tough task because of the amount of work and the broad expertise needed in networking as well as formal methods. This difficulty makes verification hard to employ in networks where key functionality is even slightly different from the ones targeted by an existing tool.

We have witnessed this difficulty first hand with tools that we have helped develop. At Microsoft, our engineers have developed tools to verify the safety of changes to access control lists, network security groups, and forwarding table rules. Each time we had develop a new tool from scratch despite the similarity of these functionalities because extending the prior tool was no easier than building a new one. Similarly, extending Batfish over the years to support more network functionality or additional router vendors with slightly different semantics has required significant changes to the analysis pipeline.

The monolithic approach was helpful for the first generation of network verification tools because the tight integration between network functionality and analysis enabled faster iteration. However, this tight coupling is no longer necessary and we can decouple network modeling from analysis. Network functionality can be modeled in a common language and analysis engines can target the language instead of specific functionality. That way, extending verification to new functionality only requires modeling that functionality. Once that is done, one or more analysis engines become available to analyze that functionality.

A linguistic approach

There are multiple ways to tackle the Network Verification 2.0 challenges above, but a linguistic approach is particularly appealing (which we are exploring; see here and here). To make it easy for engineers to specify inputs, along with their networks’ unwritten assumptions, we should design an invariant language that can naturally express sets of values and set operations like unions and differences. We may consider a language that blends SQL-like queries over a data model of the network and regular expressions over path constraints.

A high-level invariant language over a well-defined data model also makes it easy to track which network elements (e.g., devices, interfaces, paths) are “covered” by an invariant. This basic capability can then be the basis for providing feedback to network engineers about how well their invariant set covers the network. This is similar to how test frameworks for software can quantify the extent of software coverage, though we will need custom measures for network coverage given the differences in the two domains (e.g., a network is not a sequence of statements or basic blocks, which is the model employed by software test frameworks).

Finally, we should look toward intermediate verification languages such as Rosette, Boogie, and Kaplan to engineer network verification tools to be easy to extend. These languages allow multiple source languages to be compiled to them and various analysis approaches then become accessible, without the need for inventing source language specific analysis tools. In a similar manner, we could translate different network functionalities into an intermediate language and develop a common set of analysis tools for this language. Then, verification can be easily extended to any functionality that can be translated to this intermediate language. Of course, for this approach to be practical, we need to design an expressive intermediate language for which engineers can develop efficient analyses.

Summary

The next generation of network verification research must enable broad use of verification tools such that all networks are rigorously analyzed and they become indispensable to engineers like ping and traceroute are today. We can achieve this goal by making the tools more accessible to engineers on the ground and easy to extend to adjacent functionality. Solving these challenges will radically improve the security and reliability of the networking infrastructure that is critical to modern society.

Ryan Beckett

Ryan Beckett  Ratul Mahajan

Ratul Mahajan